Data integrity is integral to reproducibility.

I recently read something on an Internet web site called Facebook; it’s supposed to be quite the thing at the moment. Friend and skeptical academic James Coyne, whose fearless stabs at the methodologically pathetic and conceptually weak I much admire, instafacetweetbooked a post over at Mind the Brain, pointing to a case in post-publication peer review that made me wonder whether I was looking at serious academic discourse or toddlers in kindergarten trying to smash each other’s sand castles. James and I have co-authored a manuscript about the shortcomings in psychotherapy research which is available freely here, and I’m ashamed to say that I still haven’t met up with James in person, although he’s tried to get a hold of me more than once when he was in Amsterdam.

Anyway, case in point: during post-publication peer review, in which the reviewers highlighted flaws in the original analysis, the original authors had manipulated the published data to pretend the post-publication peer reviewers were a bunch of idiots who didn’t know what they were doing. This is clearly pathetic and must have been immensely frustrating for the post-publication reviewers (it was a heroic feat in itself to be able to prove such devious manipulations in the first place; thankfully they took close note of the data set time stamps).

What can be done? Checking time stamps is trivial, but so is manipulating time stamps. My mind immediately took to what nerdy computery types like me have used for a very, very long time: file checksums. We use these things to check whether, for example, the file we just downloaded didn’t get corrupted somewhere along the sewer pipes of the Internet. Best known, probably, are MD5-hashes, a cryptographic hash of the information in a file. MD5-hashes are, for practical purposes, unique: they are 128 bits long, usually written as 32 hexadecimal characters (0-9, A-F), which yields 16^32 = 2^128 ≈ 3.4 × 10^38 different combinations. Deliberately engineered collisions do exist, which is why MD5 is no longer recommended for security purposes, but two different files will not end up with the same hash by accident. That’ll do nicely to catalogue all the Internet’s cat memes with unique hashes from decades past and aeons to come, and then some. So, if I were to download nyancat.png from www.nyancatmemerepository.com, I could calculate the hash of that downloaded file using, e.g., the excellent md5check.exe by Angus Johnson, which gives me a 32-character hash that I could then compare with the hash as shown on www.nyancatmemerepository.com. Few things are worse than corrupted cat memes, really, but let’s consider that these hashes are equally useful to check whether a piece of, say, security software, wasn’t tampered with somewhere between the programmer’s keyboard and your hard drive: it’s the computer equivalent of putting a tiny sliver of sellotape on the cookie jar to see that nobody’s nicking your Oreos.
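For those without md5check.exe to hand, the same download check can be sketched in a few lines of Python. This is a minimal sketch using the standard-library `hashlib` module; the function names `file_md5` and `matches_published` are my own for illustration and are not part of any tool mentioned here.

```python
import hashlib


def file_md5(path, chunk_size=1 << 20):
    """Return the MD5 digest of a file as 32 uppercase hex characters."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        # Read in chunks so that large data files need not fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def matches_published(path, published_hash):
    """Compare a file's MD5 with a published hash, ignoring case and whitespace."""
    return file_md5(path) == published_hash.strip().upper()
```

Calling `matches_published("nyancat.png", published_hash)`, with `published_hash` copied from the download page, would return `True` only if the downloaded bytes produce that same hash.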

How can all this help us in science and the case stated above? Let’s try to corrupt some data. Let’s look at the SPSS sample data file “anticonvulsants.sav” as included in IBM SPSS21. It’s a straightforward data set looking at a multi-centre pharmacological intervention for an anticonvulsant vs. placebo, for patients followed for a number of weeks, reporting number of convulsions per patient per week as a continuous scale variable. The MD5 hash (“checksum”) for this data file is F5942356205BF75AD7EDFF103BABC6D3 as reported by md5check.exe.

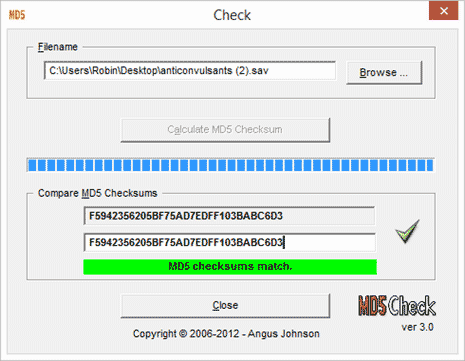

First, I duplicate the file (anticonvulsants (2).sav), and md5check.exe tells me that the checksum matches with the original [screenshot] – these files are bit-for-bit exactly the same. The more astute observer will wonder why changing the filename didn’t change the checksum (bit-for-bit, right?). Let’s not go into that much detail, but Google most assuredly is your friend if you really must know.
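For the impatient, a small sketch of the duplicate-file check, assuming Python and its standard library. The hash is calculated over the bytes inside the file, and the filename is not among those bytes. The file contents below are made up for the demonstration and have nothing to do with the real anticonvulsants.sav.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def md5_of(path):
    """MD5 of a file's contents; the filename never enters the calculation."""
    return hashlib.md5(Path(path).read_bytes()).hexdigest().upper()


def demo_copy_keeps_hash():
    """Copy a made-up data file under a new name and hash both copies."""
    with tempfile.TemporaryDirectory() as tmp:
        original = Path(tmp) / "data.sav"
        original.write_bytes(b"patient,week,convulsions\n1FSL,1,2\n")
        duplicate = Path(tmp) / "data (2).sav"
        shutil.copy(original, duplicate)
        return md5_of(original), md5_of(duplicate)


first, second = demo_copy_keeps_hash()
print(first == second)
```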

Now, to test the anti-tamper check, let’s say we’re being mildly optimistic about the number of convulsions that our new anticonvulsant can prevent. Let’s look at patient 1FSL from centre 07057. He’s on our swanky new anticonvulsant, and the variable ‘convulsions’ tells us he’s had 2, 6, 4, 4, 6 and 3 convulsions each week, respectively. But I’m sure the nurses didn’t mean to report that. Perhaps they mistook his spasmodic exuberance during spongey-bathtime as a convulsion? Anyway. I’m sure they meant to report 2 fewer convulsions per week as he gets the sponge twice a week, so I subtract 2 convulsions for each week, leaving us with 0, 4, 2, 2, 4 and 1 convulsions.

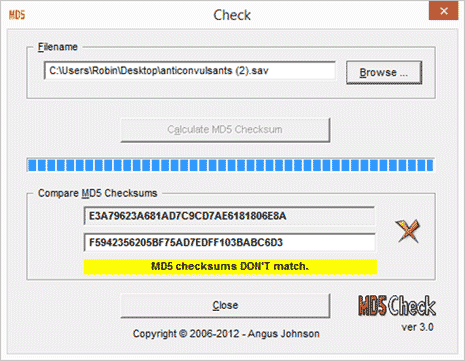

Let’s save the file and compare checksums against the original data file.

Oh dear. The data done broke. The resulting checksum for the… enhanced dataset is E3A79623A681AD7C9CD7AE6181806E8A, which is completely different from the original checksum, which was F5942356205BF75AD7EDFF103BABC6D3 (are you convulsing yet?).

Since an MD5-hash is calculated from every single bit in the file, changing just one bit of information produces a completely different hash and so gives away that data integrity has been compromised; and regular numbers take up more than just one bit of information. Be it data corruption or malicious intent, if there’s a mismatch in hashes then there’s a problem. Is this a good point to remind you that replication is a fundamental underpinning of science? Yes it is.
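To see how sensitive the hash is, here is a small sketch in Python that inverts exactly one bit in a handful of bytes and hashes both versions. The byte string is a made-up stand-in for a row of data, not the actual SPSS file format, and `flip_bit` is a helper of my own.

```python
import hashlib


def flip_bit(data, bit_index):
    """Return a copy of data with exactly one bit inverted."""
    changed = bytearray(data)
    changed[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(changed)


def md5_hex(data):
    """MD5 of a byte string as 32 uppercase hex characters."""
    return hashlib.md5(data).hexdigest().upper()


original = b"1FSL,07057,2,6,4,4,6,3"
tampered = flip_bit(original, 0)  # one bit out of 176 is different

print(md5_hex(original))
print(md5_hex(tampered))
print(md5_hex(original) == md5_hex(tampered))
```

The two printed hashes should look nothing alike, even though the inputs differ by a single bit.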

This was just a simple proof-of-concept and I’m sure this has been done before. The wealth of ‘open data’ means that data are open to both honest re-analysis and dishonest re-analysis. To ensure data-integrity, when graciously uploading raw data with a manuscript, why not include some kind of digital watermark? In this example, I’ve used the humble (and quite vulnerable) MD5-hash to show how an untampered dataset would pass the checksum test, making sure that re-analysts are all singing from the same datasheet as the original authors, to horribly butcher a metaphor. Might I suggest, “Supplement A1. Raw Data File. MD5 checksum F5942356205BF75AD7EDFF103BABC6D3”.
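Generating such a line need not be manual labour. Below is a minimal sketch in Python; the function name `supplement_line` and its parameters are hypothetical, and the optional `algorithm` argument is my own addition so that a sturdier hash such as SHA-256 could be swapped in for the vulnerable MD5.

```python
import hashlib


def supplement_line(label, title, path, algorithm="md5"):
    """Build a 'Supplement ... checksum ...' line for a data file."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        # Hash the file in chunks so that large data sets are no problem.
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    checksum = digest.hexdigest().upper()
    return f"{label}. {title}. {algorithm.upper()} checksum {checksum}"
```

For example, `supplement_line("Supplement A1", "Raw Data File", "anticonvulsants.sav")` would produce a line in the format suggested above, and passing `algorithm="sha256"` would produce the same line with a 64-character SHA-256 checksum instead.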